What is Ethical AI?

Ethical AI refers to the design, development, deployment, and governance of artificial intelligence systems in a way that aligns with core human values such as fairness, accountability, transparency, and privacy. Its goal is to make AI decisions explainable, inclusive, non-discriminatory, and respectful of legal and societal norms. In 2025 and beyond, ethical AI is not just a principle: it is a performance standard, a regulatory requirement, and a business imperative.

Why It Matters Now: From Innovation to Responsibility

We are entering an era in which AI no longer just assists with decisions; in many cases it makes them. From credit scoring and job filtering to medical diagnoses and predictive policing, AI increasingly affects people's lives in tangible, sometimes irreversible ways. Yet many models remain opaque, biased, or trained on data collected without consent.

Ethical AI addresses this widening gap between technical capability and human responsibility. In today’s regulatory climate—shaped by the EU AI Act, the U.S. Executive Order on AI, and India’s DPDP Act—enterprises can no longer treat fairness, explainability, or accountability as “nice to have.” They’re the price of admission for trust, adoption, and long-term AI viability.

Key Dimensions of Ethical AI

- Fairness: Preventing algorithmic bias based on race, gender, income, or geography, and ensuring that training data reflects the diversity of the real world.

- Transparency & Explainability: Making sure that AI decisions can be understood and challenged by stakeholders, including regulators, customers, and the people affected.

- Privacy & Consent: Embedding privacy-by-design and ensuring individuals retain control over how their personal data is used in AI training or inference.

- Accountability & Governance: Defining clear responsibility for AI outcomes across the design, deployment, and oversight layers, whether a given decision was made by a person or by a model.

- Security & Robustness: Ensuring AI systems are resilient to adversarial attacks, model poisoning, or data drift, especially when deployed in high-stakes environments.
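
The fairness dimension above can be made measurable. As a minimal sketch, assuming binary decisions (1 = favorable outcome) and a single group attribute, the Python below computes two common group-fairness indicators: the gap between the highest and lowest selection rates, and the ratio of the lowest to the highest. The function names and the sample data are hypothetical and chosen only for illustration; real audits typically combine several metrics and domain review.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of favorable decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions, groups):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(decisions, groups):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    # If no group receives any favorable decision, treat the rates as equal.
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical sample: group "a" is approved 3 times out of 4, group "b" once.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap = demographic_parity_gap(decisions, groups)
ratio = disparate_impact_ratio(decisions, groups)
print(f"selection-rate gap: {gap:.2f}, impact ratio: {ratio:.2f}")
```

A large gap or a low ratio does not prove discrimination on its own, but it is a signal that the model and its training data deserve closer review.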

From Guardrail to Growth Driver: The Strategic Inflection Point of Ethical AI

Ethical AI has moved beyond philosophy. It now sits at the intersection of regulatory obligation, competitive positioning, and digital trust. Regulations and directives such as the EU AI Act, India's DPDP Act, and U.S. executive orders are making impact assessments, transparency protocols, and algorithmic accountability baseline requirements. At the same time, consumers and enterprise buyers alike are demanding clarity: Why was this decision made? Is it fair? Is it explainable?

In this high-stakes environment, ethical AI is no longer a defensive mechanism—it’s a proactive lever of trust and differentiation. Organizations that embed ethics into the fabric of their AI models, workflows, and governance practices are not just mitigating risk; they are positioning themselves as market leaders in industries where transparency, fairness, and accountability are fast becoming sources of long-term brand value and customer loyalty.

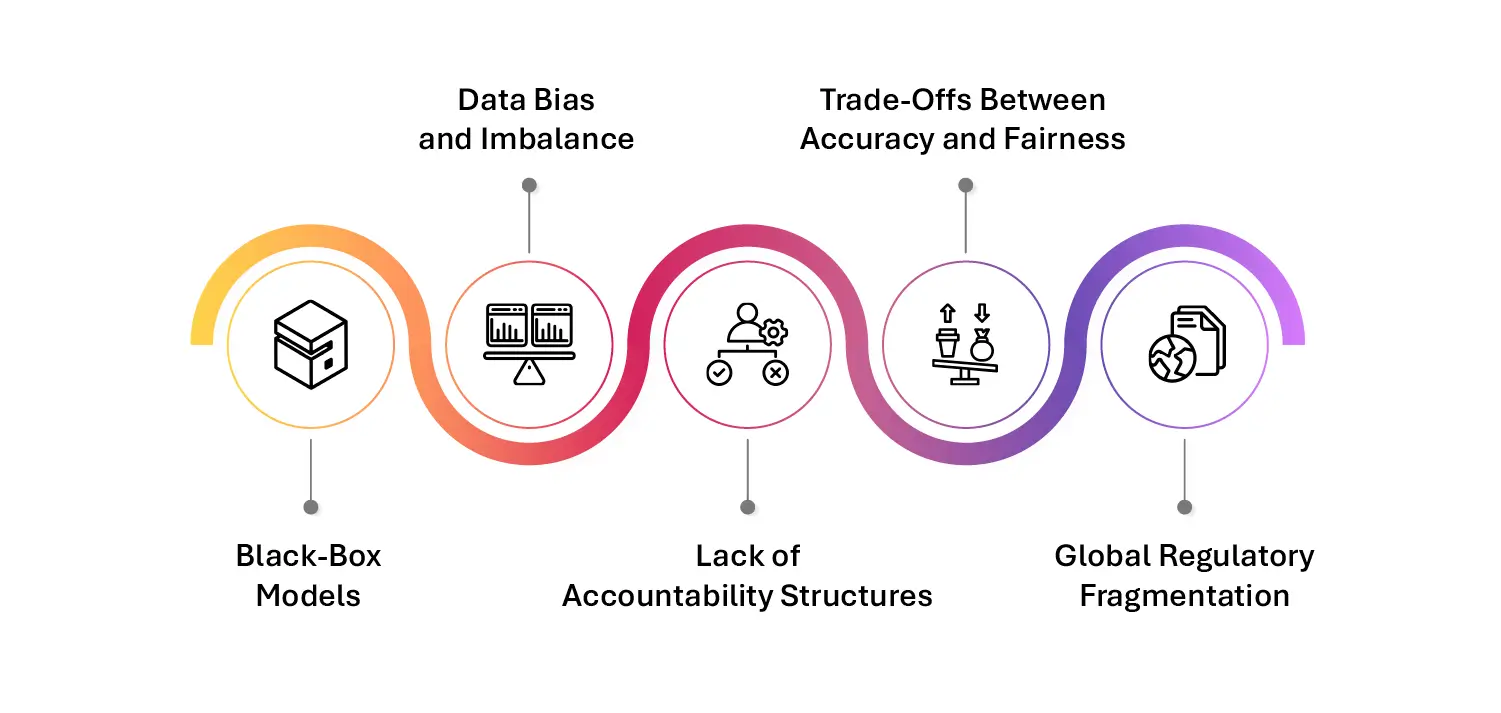

Challenges to Ethical AI Adoption

- Black-Box Models: Deep learning and generative models are often difficult to interpret, making it hard to explain decisions to users or regulators.

- Data Bias and Imbalance: Historical data may encode societal biases, which get amplified by AI systems if not carefully mitigated.

- Lack of Accountability Structures: Many organizations lack clear ownership of AI outcomes, leading to ethical blind spots and risk diffusion.

- Trade-Offs Between Accuracy and Fairness: AI developers often face tensions between model performance and ethical safeguards, especially when deploying at scale.

- Global Regulatory Fragmentation: Differing standards across regions (EU vs. U.S. vs. MENA) make it difficult to establish unified, compliant ethical frameworks.

Solutions: Operationalizing Ethical AI by Design

- Embed Ethics in the AI Lifecycle: From data sourcing and model design to deployment and feedback loops, bake in ethical checkpoints at every phase.

- Adopt Model Auditing and Governance Platforms: Use AI observability tools to surface bias, drift, anomalies, and explainability gaps in real time.

- Practice Federated Data Governance: Decentralize AI data pipelines while ensuring role-based access, consent tracing, and jurisdiction-specific policy enforcement.

- Implement Ethical Risk Scoring Systems: Score AI models based on risk exposure (e.g., to bias, privacy, explainability), and tie model deployment to approval workflows.

- Train Teams in AI Ethics: Train the people who build and approve AI systems, and establish cross-functional ethics committees that bring together AI practitioners, compliance officers, legal counsel, and domain experts.
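
The risk-scoring idea in the list above can be sketched in a few lines of Python. This is an illustrative outline, not a reference implementation: the three risk dimensions come from the text, but the weights, the thresholds, and the names `ModelRiskAssessment` and `deployment_decision` are assumptions made up for this example. In practice an ethics committee would set them and revisit them over time.

```python
from dataclasses import dataclass

# Hypothetical weights per risk dimension; they sum to 1.0.
RISK_WEIGHTS = {"bias": 0.4, "privacy": 0.35, "explainability": 0.25}

# Hypothetical thresholds on the weighted score (0 = no risk, 1 = maximum risk).
REVIEW_THRESHOLD = 0.3
BLOCK_THRESHOLD = 0.6


@dataclass
class ModelRiskAssessment:
    model_name: str
    scores: dict  # risk dimension -> score in [0, 1]

    def overall_risk(self) -> float:
        """Weighted average of the per-dimension risk scores."""
        for dimension in RISK_WEIGHTS:
            score = self.scores[dimension]
            if not 0.0 <= score <= 1.0:
                raise ValueError(f"{dimension} score must be in [0, 1]: {score}")
        return sum(RISK_WEIGHTS[d] * self.scores[d] for d in RISK_WEIGHTS)


def deployment_decision(assessment: ModelRiskAssessment) -> str:
    """Map the overall risk score to a step in the approval workflow."""
    risk = assessment.overall_risk()
    if risk >= BLOCK_THRESHOLD:
        return "block"
    if risk >= REVIEW_THRESHOLD:
        return "review"  # route to the ethics committee before deployment
    return "approve"


# Hypothetical assessments for three models.
low = ModelRiskAssessment("churn-model", {"bias": 0.2, "privacy": 0.1, "explainability": 0.4})
medium = ModelRiskAssessment("hiring-filter", {"bias": 0.5, "privacy": 0.4, "explainability": 0.4})
high = ModelRiskAssessment("credit-scorer", {"bias": 0.8, "privacy": 0.5, "explainability": 0.6})

for assessment in (low, medium, high):
    print(assessment.model_name, deployment_decision(assessment))
```

Keeping the decision as a plain function of the score makes the gate easy to audit: anyone reviewing a deployment can see which threshold applied and why.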

The Future of Ethical AI: From Reactive to Proactive

The ethical AI of tomorrow will be autonomous, self-governing, and preemptively compliant. AI engines will monitor themselves for fairness violations, flag unintended outcomes, and auto-adjust model parameters based on pre-set ethical boundaries.

But ethics can’t be fully automated—it requires intent, oversight, and human-in-the-loop mechanisms. Enterprises that balance automation with accountability will be the ones that not only deploy AI successfully but also do so sustainably.
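
One way to picture that balance is a monitor that watches recent decisions automatically but hands the judgment call to a person. The sketch below is a simplified illustration under stated assumptions: the class name `FairnessMonitor`, the window size, the minimum sample size, and the `max_gap` boundary are all hypothetical. It flags a possible fairness violation for human review instead of adjusting the model on its own.

```python
from collections import deque


class FairnessMonitor:
    """Tracks recent decisions and flags large gaps in approval rates."""

    def __init__(self, max_gap=0.2, window=100, min_per_group=10):
        self.max_gap = max_gap              # pre-set ethical boundary (assumed)
        self.min_per_group = min_per_group  # avoid judging tiny samples
        self.recent = deque(maxlen=window)  # sliding window of decisions

    def record(self, group, approved):
        """Store one decision for the given group."""
        self.recent.append((group, bool(approved)))

    def check(self):
        """Return a status dict; never changes the model itself."""
        counts = {}
        for group, approved in self.recent:
            total, positive = counts.get(group, (0, 0))
            counts[group] = (total + 1, positive + approved)
        rates = {
            group: positive / total
            for group, (total, positive) in counts.items()
            if total >= self.min_per_group
        }
        if len(rates) < 2:
            return {"status": "insufficient-data", "gap": None}
        gap = max(rates.values()) - min(rates.values())
        status = "needs-human-review" if gap > self.max_gap else "ok"
        return {"status": status, "gap": gap}


# Hypothetical usage with a small minimum sample size for demonstration.
monitor = FairnessMonitor(max_gap=0.2, window=50, min_per_group=4)
for approved in (True, True, True, False):
    monitor.record("a", approved)
for approved in (True, False, False, False):
    monitor.record("b", approved)

print(monitor.check())
```

The monitor only reports. Deciding whether the gap is justified, and what to change if it is not, stays with the accountable human reviewers.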
