What is Statistical Sampling?

Statistical sampling is a method of selecting a subset of data from a larger dataset to estimate characteristics or draw conclusions about the entire population. It’s a foundational technique used across auditing, analytics, compliance, and data science when analyzing every single record is impractical or resource-intensive.

Rather than examining every data point, statistical sampling allows for high-confidence inferences by analyzing a smaller, representative portion, enabling faster insights, reduced costs, and scalable decision-making.

Why Statistical Sampling Matters

In an era of big data, where enterprises process millions of files and transactions daily, full-population analysis isn’t always feasible. Statistical sampling offers a practical, defensible alternative—especially in areas like internal audits, regulatory reviews, AI model validation, and data quality checks. When applied correctly, statistical sampling improves efficiency without sacrificing accuracy.

It also offers legal defensibility in regulatory environments, allowing organizations to demonstrate due diligence and compliance using established sampling frameworks.

Types of Statistical Sampling

- Random Sampling: Every item in the population has an equal chance of selection. Ideal for unbiased estimation.

- Stratified Sampling: The population is divided into strata (groups) based on characteristics, and samples are drawn from each. Improves accuracy when data is heterogeneous.

- Systematic Sampling: Every nth record is selected after a random start. Useful for structured datasets.

- Cluster Sampling: Entire groups (clusters) are randomly selected, and all or some members are analyzed. It is often used in geographically dispersed populations.

- Judgmental or Non-Statistical Sampling: Based on expert judgment rather than random selection—used when statistical techniques are not feasible.

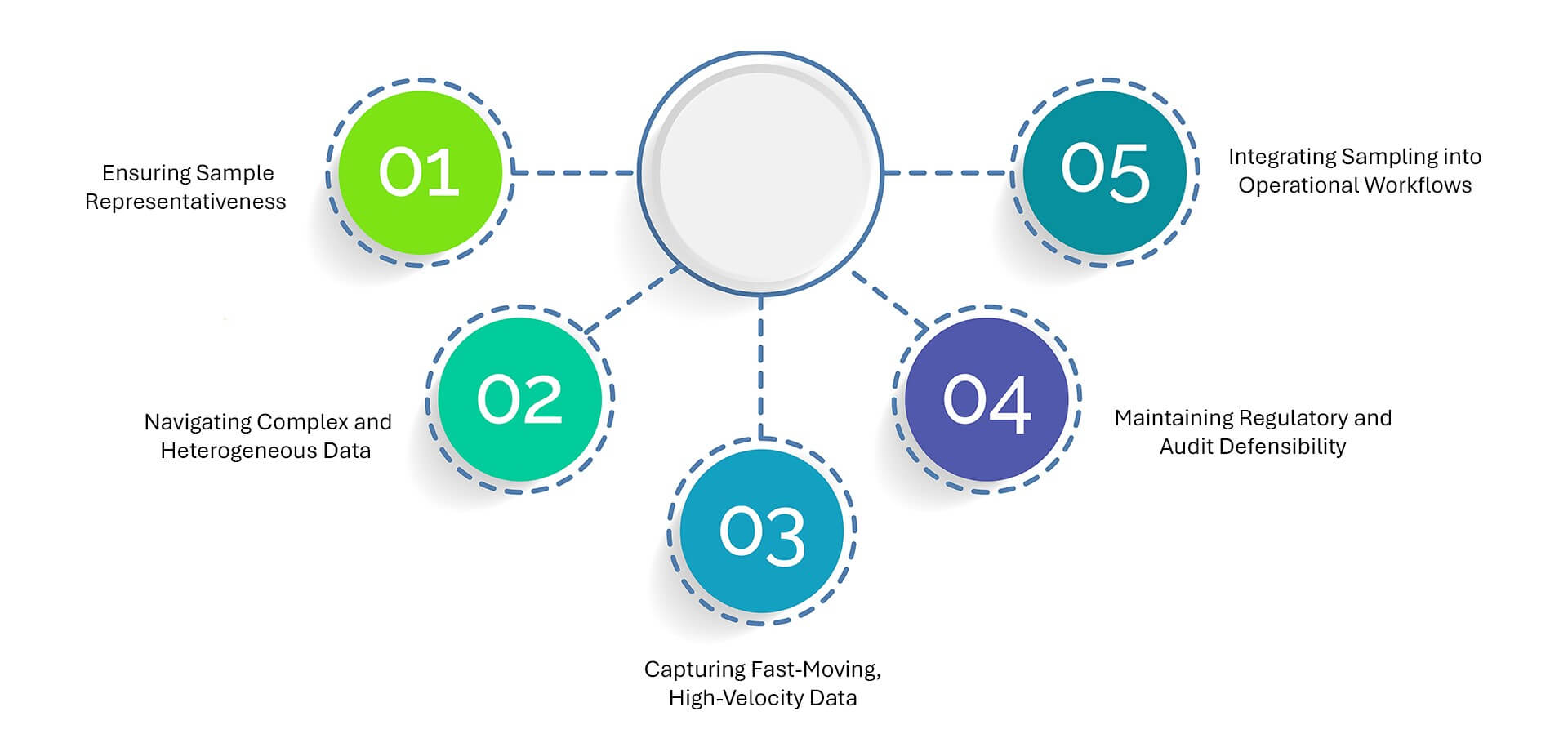

Key Challenges in Statistical Sampling

1. Ensuring Sample Representativeness

One of the most critical challenges in statistical sampling is ensuring that the selected sample truly reflects the diversity of the entire dataset. A poorly chosen sample can lead to skewed insights, flawed assumptions, and misleading results.

What to do:

Leverage automated, randomized sampling algorithms to eliminate human bias and ensure proportional representation across key variables. Where data is unevenly distributed, apply stratified or weighted sampling techniques to maintain accuracy.

2. Navigating Complex and Heterogeneous Data

Enterprise data is rarely uniform. It spans structured, semi-structured, and unstructured formats across multiple systems. Sampling this kind of complexity requires more than random selection—it demands contextual understanding.

What to do:

Use data profiling tools to assess distribution, format, and completeness before sampling. Apply data transformation or normalization techniques to create a unified sampling base, and ensure that unstructured elements (like emails or logs) are parsed and indexed appropriately.

3. Capturing Fast-Moving, High-Velocity Data

In environments such as fraud detection, e-commerce, or real-time monitoring, patterns evolve rapidly. Static samples quickly become outdated, increasing the risk of missing critical anomalies or shifts in behavior.

What to do:

Implement dynamic or rolling sampling techniques that refresh samples at predefined intervals. Combine sampling with real-time analytics or anomaly detection systems to continuously flag deviations even beyond the sampled data.

4. Maintaining Regulatory and Audit Defensibility

In compliance scenarios, the legitimacy of decisions made using sampled data may be questioned if the sampling methodology lacks rigor or transparency.

What to do:

Adopt standards-based sampling frameworks and document the entire process—sampling logic, confidence intervals, margin of error, and sample size justification. Ensure audit logs are maintained for every sampling event.

5. Integrating Sampling into Operational Workflows

Sampling is often treated as an isolated task rather than a continuous part of governance, analytics, or AI pipelines, limiting its impact and repeatability.

What to do:

Embed sampling into automated data workflows using API integrations with data lakes, governance tools, and audit platforms. Trigger sample-based reviews at key workflow stages—such as during ingestion, transformation, or model training—for ongoing oversight.

Scaling Trust in Data: The Strategic Role of Statistical Sampling in Modern Enterprises

As enterprises become increasingly data-driven, statistical sampling is emerging as a critical enabler, not just of efficiency, but of trust. It serves as a bridge between scale and scrutiny, allowing organizations to assess, validate, and govern massive datasets without the burden of full-population analysis.

In AI development, sampling helps verify the quality, diversity, and fairness of training data. It enables teams to test assumptions, detect bias, and validate outputs, without needing to audit every data point. This is especially crucial as models evolve, data volumes surge, and regulators call for explainability and accountability in algorithmic decision-making.

In audit and compliance operations, sampling transforms how internal controls are tested. Instead of exhaustive reviews, teams can focus on statistically significant subsets that yield high-confidence insights—streamlining reporting, reducing overhead, and ensuring defensibility. Sampling is often the only practical way to prove ongoing compliance in high-volume, real-time environments like financial transactions or healthcare records.

Data governance, too, is increasingly embracing sampling as a quality assurance mechanism. From data integrity checks to metadata validation, statistical sampling ensures organizations can maintain oversight of sprawling data estates without slowing innovation.

Ultimately, statistical sampling isn’t just a mathematical tool—it’s a strategic function. It enables organizations to scale intelligence, enforce accountability, and maintain operational agility in a world where data volume will only continue to grow.

Getting Started with Data Dynamics:

- Learn about Unstructured Data Management

- Schedule a demo with our team

- Read the latest blog: Data Sovereignty Is No Longer a Policy Debate. It’s the New Rulebook for Digital Governance