Julia Roberts, a popular American actress, has reportedly insured her smile for $30 million, and Cristiano Ronaldo has insured his legs for $144 million. Bizarre, right?

As strange as it may sound, the insurance industry has boomed in the past few years, and now you can insure just about everything you own.

However, this new-age insurance game is becoming increasingly digital.

Over the next decade, insurers will be fully equipped with technologies such as cloud, data analytics, artificial intelligence, machine learning, and blockchain to drive operations, improve customer experience, and build trust and transparency into the process.

The picture looks rosy from afar, but the industry’s current digital landscape still needs considerable improvement and modernization. Rapidly changing customer demands, extreme price competition, and the growing cost of losses are some of the biggest risks for insurance providers that are slow to move to the digital world.

Reality Check for Digital Insurance

New entrants in the industry, known as insurtechs, are backed by new technologies, funding, and the vision to drive product innovation, reduce costs, and improve scalability, which poses a threat to large, established players. These entrants are enticing customers with a better value proposition by offering cloud-enabled and data-driven products. Digital insurance is gaining popularity, and these innovative products are becoming mainstream, especially after the COVID-19 pandemic. Meanwhile, established insurance companies find it difficult to keep up with the rapid changes.

According to Global Insurtech Market – Growth, Trends, and Forecast, global insurtech market revenue was valued at $5.48 billion in 2019 and is expected to reach $10 billion to $14 billion by 2025, growing at a compound annual growth rate (CAGR) of 10.8% between 2019 and 2025.
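As a quick sanity check on those figures, the compound-growth arithmetic can be reproduced in a few lines. This is only an illustrative sketch: the function names are made up for this example, and the six-year horizon (2019 to 2025) is an assumption about how the report counts periods.

```python
def project(start_value: float, rate: float, years: int) -> float:
    """Compound a starting value forward at a fixed annual growth rate."""
    return start_value * (1 + rate) ** years


def cagr(start_value: float, end_value: float, years: int) -> float:
    """Annual growth rate implied by a start value, an end value, and a horizon."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above, in billions of US dollars.
projected_2025 = project(5.48, 0.108, 6)
implied_rate_for_14 = cagr(5.48, 14.0, 6)

print(f"2025 revenue at 10.8% CAGR: ${projected_2025:.1f} billion")
print(f"CAGR needed to reach $14 billion: {implied_rate_for_14:.1%}")
```

Under these assumptions, a 10.8% CAGR lands at roughly $10.1 billion, the low end of the quoted range, while reaching $14 billion would take a rate closer to 17%.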

The Insurance Industry is All About Data!

Traditional insurance companies are embracing new technologies to drive the transformation of their businesses, primarily based on cloud computing. Fuelled by an urgency to join the bandwagon and step into the league of digital insurance, these established players are making some very bad decisions.

Companies are making the mistake of moving everything into the cloud all at once, which means migrating decentralized and unstructured data from which it is nearly impossible to derive intelligence. Furthermore, traditional lift-and-shift operations performed without any analytics add complexity, cost, and risk in the cloud. Abnormalities, errors, risks, duplicates, and unimportant elements of the legacy infrastructure end up stored in the cloud, exposing a wide variety of vulnerabilities that may lead to a breach.

The solution to this is to harness the power of analytics from existing data assets and leverage it to transform digitally. Understanding the data residing within the system before moving to the cloud is the first step to avoiding the mess later on and simplifying the process. Once enterprises understand the data, they can easily classify and tag data files and make the right choices in selecting the cloud solution and optimizing storage by distinguishing cold data from hot data. This can impact IT spending by reducing the cloud cost significantly. Additionally, organizations can easily identify risk-prone, personal, and sensitive data. This data can then be secured, protected, and governed to avoid any risk.

Let’s take a closer look at why insurance is all about data and why data has become one of its biggest assets. Here are the biggest data points in the insurance industry value chain:

- Customer information: The most important data set, in which customer details are recorded from policy documents, personal information, address proofs, personal IDs, and claim documents.

- Asset information: This includes data about the insured asset, along with related laws and information.

- Policy and claim management: This might include the various versions of policies and claims processing and can vary from customer to customer.

- Estimation data: The insurer calculates the risk of the insurance and the associated losses. This is traditionally done manually by considering multiple factors: historical data, behavioral data, non-insurance data, and more.

This is just the tip of the iceberg, as various hidden data points in the insurance industry go unnoticed during manual analysis. This results in enormous amounts of data, which organizations typically store in data lakes. In data lakes, the data is duplicated, unsupervised, and unutilized, which creates challenges such as poor knowledge of the data, difficulty sharing data under access control, and added security risk.

What is a data lake? – A data lake is a repository that allows you to store all your structured and unstructured data at any scale.

Aggregating these data lakes into a centralized data ocean becomes crucial for ensuring data consolidation and robust privacy & security of personally identifiable information (PII). Data oceans exist in the cloud. Data management in a data ocean is a one-time activity instead of multiple rounds of management, analysis, security, and compliance checks across numerous data lakes.

What is a data ocean? – A data ocean is a single, centralized repository in the cloud that consolidates an organization’s data lakes, so that data management, analysis, security, and compliance checks are applied in one place.

To learn more, read ‘From Multiple Data Lakes to a Single Data Ocean in the Cloud – An evolution that could change how insurance companies manage data.’

So here’s the million-dollar question: what is the most efficient and effective way to migrate to the cloud, as opposed to the traditional lift-and-shift approach, especially when insurance companies are dealing with enormous sprawls of unstructured data? What’s the silver bullet here?

The insurance industry’s guide to accelerating cloud migrations: Six golden rules

- Categorize hot and cold data based on metadata analytics

Categorize your data into hot and cold data based on file ownership, creation time, last-accessed time, type, and size. Custom tagging helps here by letting you quickly attach custom information to a file or document and then apply it to a dataset or to the object being created. You can then type in a keyword and quickly get a listing of thousands of matching documents. Custom tagging shortens research time from days, or sometimes months, to a matter of seconds and enables better decision-making, reporting, and optimization.
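To make the idea concrete, here is a minimal sketch of metadata-based classification and tagging. It is not how any particular product works: the `FileMeta` record, the 180-day cold threshold, and the tag names are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class FileMeta:
    """File metadata only; file contents are never read in this sketch."""
    path: str
    owner: str
    file_type: str
    size_bytes: int
    created: datetime
    last_accessed: datetime


# Assumed policy: anything untouched for more than 180 days counts as cold.
COLD_AFTER = timedelta(days=180)


def temperature(meta: FileMeta, now: datetime) -> str:
    """Classify a file as hot or cold from its last-accessed time."""
    return "cold" if now - meta.last_accessed > COLD_AFTER else "hot"


def tag(meta: FileMeta, now: datetime) -> dict:
    """Build custom tags for a file from its metadata."""
    return {
        "temperature": temperature(meta, now),
        "owner": meta.owner,
        "type": meta.file_type,
    }


def search(tags_by_path: dict, key: str, value: str) -> list:
    """Return the paths of all files whose tag `key` equals `value`."""
    return sorted(p for p, tags in tags_by_path.items() if tags.get(key) == value)
```

With tags built once per file, a lookup such as `search(tags, "temperature", "cold")` becomes a simple filter, which is what makes keyword-style searches over thousands of documents quick.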

- Identify PII or sensitive data before moving to the cloud

Analyze the content of unstructured data files to maximize accuracy in PII/PHI/sensitive data discovery, using advanced multi-level logical expressions that combine logical operators. Data analytics and pattern recognition, assisted by AI, can help companies identify personally identifiable information (PII) that puts them at risk if it is disclosed. PII and other sensitive data can thus be discovered with greater accuracy and protected with appropriate remediation.
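A simplified sketch of pattern-based discovery combined with a logical rule might look like the following. The patterns (a US Social Security number format and a loose email match), the keyword list, and the rule itself are assumptions for illustration; real discovery tools use far richer patterns and validation.

```python
import re

# Illustrative patterns only; production rules need validation and locale coverage.
PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}

# Assumed context keywords that make an email address more likely to be sensitive.
KEYWORDS = re.compile(r"\b(policy|claim)\b", re.IGNORECASE)


def find_pii(text: str) -> dict:
    """Return every pattern that matched, with the matched strings."""
    return {name: p.findall(text) for name, p in PATTERNS.items() if p.search(text)}


def is_sensitive(text: str) -> bool:
    """Example multi-level rule: SSN OR (email AND insurance keyword)."""
    hits = find_pii(text)
    return "ssn" in hits or ("email" in hits and bool(KEYWORDS.search(text)))
```

Combining patterns with AND/OR conditions like this is one way to cut false positives: an email address alone is not flagged, but an email address in a document that also mentions a claim or policy is.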

- Protect sensitive data from unauthorized access and misuse

Scan and analyze your environment for data that could expose your employees and customers to risk. After identifying business-critical data, protect it from unauthorized access by removing public access. Robust, multi-approver remediation workflows further reduce the risk of sensitive data being accessed and misused.

- Quarantine your sensitive data in a secure and easily accessible location

The remediation analyst can quickly and securely move reported sensitive data from its current storage to a more secure yet accessible storage location. Sensitive files may be moved from one file share to another, or from a file share to an object store, including NFS- and SMB-based storage technologies. The air gap provided by Quarantine, with no means to access those files, helps you prevent ransomware attacks on critical files while providing immediate protection.

- Assess industry compliance (GDPR, HIPAA, CCPA)
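As a rough local analogy, moving a flagged file into a locked-down directory could be sketched as below. This uses only the Python standard library on a local file system; the `quarantine` function and its permission choices are assumptions, and it does not provide the air gap or the file-share-to-object-store moves described above.

```python
import os
import shutil
import stat
from pathlib import Path


def quarantine(src: Path, quarantine_dir: Path) -> Path:
    """Move a flagged file into a restricted directory and make it read-only."""
    quarantine_dir.mkdir(parents=True, exist_ok=True)
    # Only the owning account may list or enter the quarantine directory.
    os.chmod(quarantine_dir, stat.S_IRWXU)

    dest = quarantine_dir / src.name
    if dest.exists():
        # Refuse to overwrite a file that is already quarantined.
        raise FileExistsError(f"{dest} is already in quarantine")

    shutil.move(str(src), str(dest))
    # Owner read-only: the file can be reviewed but not modified in place.
    os.chmod(dest, stat.S_IRUSR)
    return dest
```

On POSIX systems these permission bits restrict access to the owning account; on Windows `os.chmod` only toggles the read-only flag, so a real deployment would rely on the storage platform's own access controls.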

Identify data sets that contain files associated with regulatory requirements using context analysis. This produces actionable, data-driven insights and reports that allow data custodians to decide what data to store, how to store it, and what level of access and use is appropriate with the individual’s consent. Enterprises can use ready-made templates from third-party providers or create their own compliance templates based on their specific requirements. This identification is the first critical step in remediating data and meeting regulatory requirements.

- Enable audit trails

Auditing creates an immutable record of changes performed on the sensitive data files. Files can be classified and tracked utilizing blockchain technology to create an immutable audit report that can be utilized for regulatory and internal data governance. The blockchain keeps a record every time the file is accessed or modified, providing a digital chain of custody for files with critical business information. A secure file sharing environment across business units or even outside the enterprise can be built on immutable audit reporting.
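The core mechanism behind such a record can be illustrated with a simple hash chain, where each entry stores the hash of the one before it. This sketch is tamper-evident rather than a full blockchain (there is no distributed consensus), and the record fields and function names are assumptions for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash preceding the first record


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the record body."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_event(chain: list, path: str, action: str, user: str, timestamp: str) -> dict:
    """Append an access or modification event linked to the previous record."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    body = {
        "path": path,
        "action": action,
        "user": user,
        "timestamp": timestamp,
        "prev_hash": prev_hash,
    }
    record = {**body, "hash": _digest(body)}
    chain.append(record)
    return record


def verify(chain: list) -> bool:
    """Recompute every hash and link; any edited record breaks the chain."""
    prev_hash = GENESIS
    for record in chain:
        body = {k: v for k, v in record.items() if k != "hash"}
        if record["prev_hash"] != prev_hash or record["hash"] != _digest(body):
            return False
        prev_hash = record["hash"]
    return True
```

Because every record commits to its predecessor, changing an earlier entry invalidates it and every link after it, which is what gives the audit trail its chain-of-custody property.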

The Data Dynamics Advantage

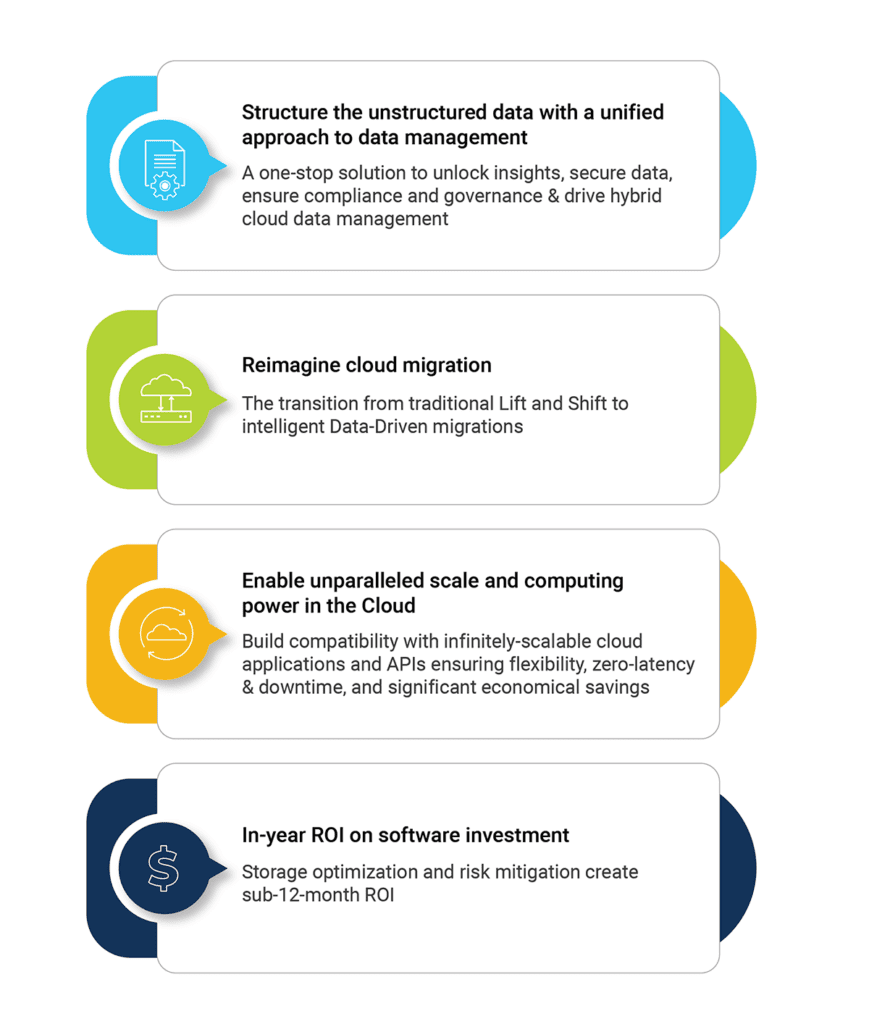

Data Dynamics is a leading provider of enterprise data management solutions via its Unified Unstructured Data Management Platform. Currently utilized by over 25% of the Fortune 100, the platform blends automation, AI, machine learning, and blockchain technologies, allowing organizations to use a single software platform to unlock insights, secure data, ensure compliance and governance, and drive hybrid cloud data management.

For us, it’s all about:

We follow four core practices to ensure efficient and effective cloud adoption:

As part of our ‘accelerating cloud adoption’ objective, we recently collaborated with Microsoft Azure to offer migrations at zero license cost. Organizations can now migrate their unstructured files and object storage data into Azure at no additional cost and with no separate migration licensing. Migrations are automated and carry minimal to no risk, with automatic access control and file security management. Click here to learn more about the program.

Click here to see how Data Dynamics used the Azure File Migration Program to help a Fortune 50 company, one of the world’s seven multinational energy “supermajors,” accelerate its net-zero emission goals while driving digital transformation.

Visit www.datadynamicsinc.com. Contact us at solutions@datdyn.com or click here to book a meeting.