Highlights:

- The energy sector increasingly recognizes the influx of data as a catalyst for optimizing energy production, enhancing sustainability, and making informed decisions. Data is not just a byproduct but a strategic asset, paving the way for a greener, more reliable energy future.

- Data Science Studios represent the epicenters of innovation in the energy sector. They bridge the gap between human intuition and AI, promoting data-driven sustainability and ushering in a smarter, more resilient energy future.

- Building an efficient Data Science Studio is not just about the technology and the experts; it’s about the data that fuels the innovation. So, as companies embark on their data-driven journey, they must recognize the critical importance of this prelude – effective data management.

In this Blog:

The energy sector is on the cusp of a remarkable transformation driven by a deluge of data. This revolution goes beyond the traditional roles of data collection and storage; it’s a paradigm shift that promises to reshape the entire industry. Data is no longer just a byproduct; it’s a strategic asset, and Data Science Studios are emerging as the command centers for this data-driven transformation. Let’s take a closer look at the influence of these studios and at the prelude to building an effective and efficient data science ecosystem.

The Emergence of Data Science Studios: A Paradigm Shift in Energy Transformation

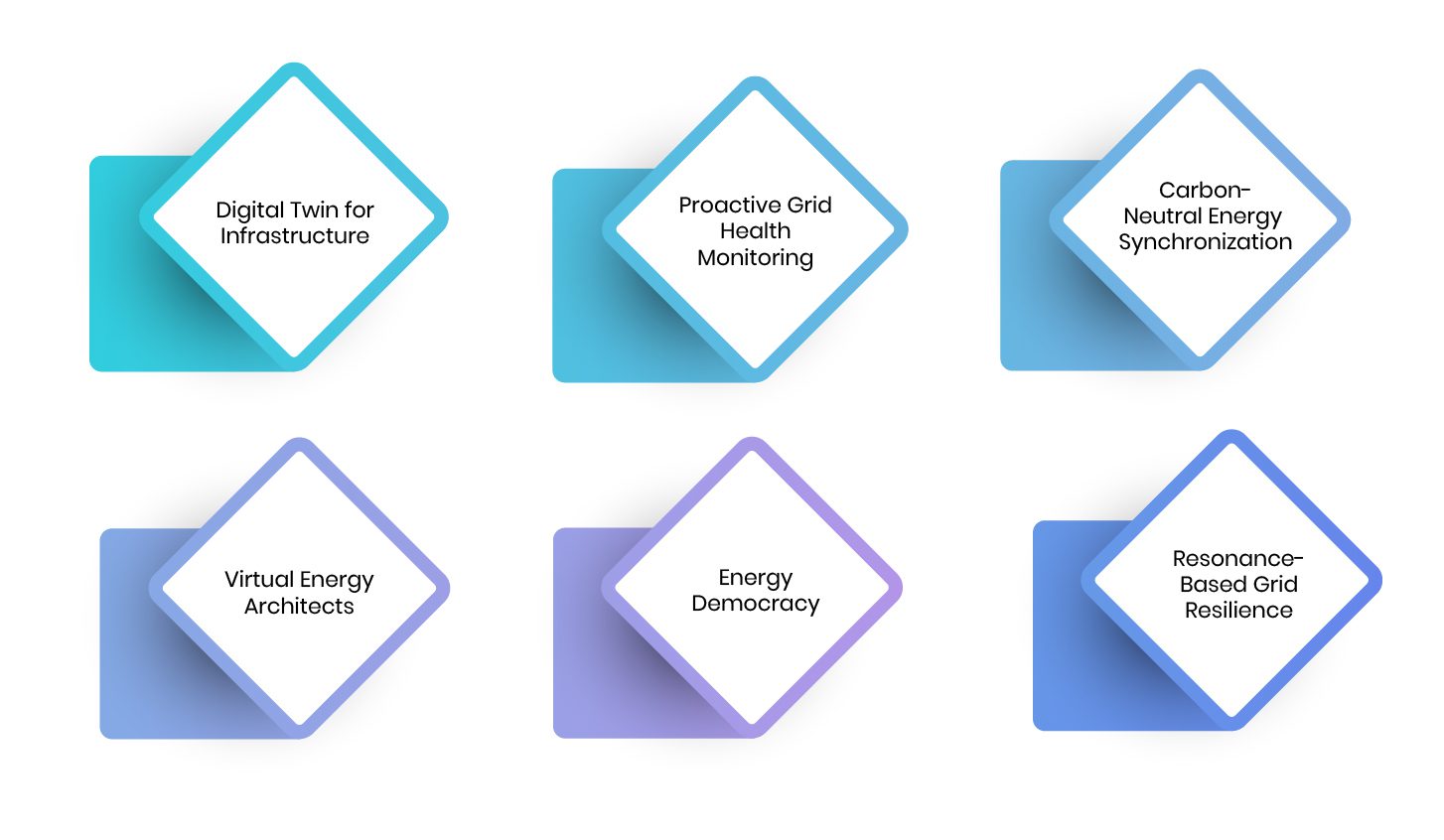

In the energy sector, the emergence of Data Science Studios represents a seismic shift in how we harness and leverage data, transcending the boundaries of conventional data analytics. These dynamic hubs are not merely data repositories; they are epicenters of innovation, poised to revolutionize the industry. Let’s explore six innovative facets of Data Science Studios that are redefining the energy landscape:

- Digital Twin for Infrastructure: A Data Science Studio extends its capabilities to creating digital twins of critical infrastructure components. Using high-fidelity 3D modeling and real-time data integration, these digital twins mirror the physical infrastructure, giving engineers a dynamic, comprehensive view of infrastructure health that facilitates predictive maintenance, early fault detection, and performance optimization. Beyond improving the reliability of energy infrastructure, digital twins pave the way for a new era of proactive infrastructure management. A survey by PwC found that organizations that use digital twin technology report a 35% reduction in operational costs and a 45% increase in operational efficiency.

- Proactive Grid Health Monitoring: A Data Science Studio draws on a network of IoT sensors with high-resolution imaging capabilities. These sensors continuously capture infrared and thermal data, detecting subtle temperature variations across power lines and substations. Advanced machine learning models analyze this data in real time, enabling predictive maintenance by identifying areas prone to overheating and potential faults. A report by Navigant Research indicates that predictive grid maintenance can reduce outage durations by up to 60% and lower maintenance costs by 10%.

- Carbon-Neutral Energy Synchronization: A Data Science Studio relies on optimization techniques such as Mixed-Integer Linear Programming (MILP) to synchronize energy sources. These models take into account energy production rates, storage capacities, weather forecasts, and carbon emissions data. The platform then orchestrates the combination of energy sources that minimizes carbon emissions, with the goal of a nearly carbon-neutral energy supply. The International Energy Agency (IEA) reports that by optimizing energy source synchronization, the integration of renewables into the grid can reduce global CO2 emissions by 70% by 2050.

- Virtual Energy Architects: AI-driven virtual architects are empowered by Reinforcement Learning (RL) algorithms. RL agents continuously learn and adapt by interacting with the grid and observing outcomes. They use state-of-the-art deep reinforcement learning models to make real-time decisions about energy distribution, ensuring grid stability and optimizing energy utilization. A study published in Nature highlights how AI-driven grid management can lead to a 20% increase in energy efficiency and a 15% reduction in energy waste.

- Energy Democracy: A Data Science Studio integrates blockchain technology to share real-time energy consumption and generation data with consumers securely and transparently. Smart contracts let consumers access their data and make energy-related decisions, such as shifting usage to off-peak hours, while the blockchain ensures data integrity and security. According to a survey by Accenture, 62% of consumers are more likely to choose a utility company that provides real-time data on their energy consumption.

- Resonance-Based Grid Resilience: This innovation involves implementing specialized tuned mass dampers in critical grid components. These dampers consist of sensors, actuators, and real-time control systems that analyze grid vibrations. When vibrations exceed safe limits, the dampers adjust their oscillations to counteract the disturbances and prevent cascading failures, much like seismic dampers in architecture. The IEEE Power and Energy Technology Systems Journal reports that implementing tuned mass dampers can reduce grid disruptions by 80% during extreme weather events or cyberattacks.
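To make the predictive-maintenance idea in the Digital Twin for Infrastructure item more concrete, here is a minimal sketch. It assumes a twin that mirrors a single health metric, a transformer’s winding temperature, and extrapolates a least-squares linear trend to estimate the time remaining until an alarm threshold. The `TransformerTwin` class, its fields, and the 80 °C threshold are hypothetical. A production twin would combine physics-based models with far richer telemetry.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class TransformerTwin:
    """Hypothetical digital twin mirroring one health metric of a transformer."""
    asset_id: str
    alarm_temp_c: float  # assumed alarm threshold for winding temperature (°C)
    history: List[Tuple[float, float]] = field(default_factory=list)  # (hour, temp_c)

    def ingest(self, hour: float, temp_c: float) -> None:
        """Mirror a new telemetry sample from the physical asset."""
        self.history.append((hour, temp_c))

    def hours_to_alarm(self) -> Optional[float]:
        """Fit a least-squares line to the history and extrapolate to the alarm.

        Returns None when there is too little data or no upward trend.
        """
        n = len(self.history)
        if n < 2:
            return None
        xs = [h for h, _ in self.history]
        ys = [t for _, t in self.history]
        mean_x, mean_y = sum(xs) / n, sum(ys) / n
        sxx = sum((x - mean_x) ** 2 for x in xs)
        if sxx == 0:
            return None
        slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx
        if slope <= 0:
            return None  # temperature is flat or falling
        intercept = mean_y - slope * mean_x
        hour_at_alarm = (self.alarm_temp_c - intercept) / slope
        return max(0.0, hour_at_alarm - xs[-1])


twin = TransformerTwin(asset_id="TX-001", alarm_temp_c=80.0)
for hour, temp in [(0, 60.0), (1, 62.0), (2, 64.0), (3, 66.0)]:
    twin.ingest(hour, temp)
print(twin.hours_to_alarm())  # 7.0
```

The readings rise by 2 °C per hour from 60 °C, so the fitted line reaches 80 °C at hour 10. That is 7 hours after the latest sample.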
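The real-time analysis step in Proactive Grid Health Monitoring can be illustrated with a simple statistical stand-in for the machine learning models that item mentions. The sketch below uses a trailing-window z-score that flags thermal readings far above recent behavior. It is not a production detector. The `flag_hotspots` function, the window of 5 readings, and the threshold of 3 standard deviations are all illustrative assumptions.

```python
from collections import deque
from statistics import mean, pstdev
from typing import List, Sequence


def flag_hotspots(readings: Sequence[float], window: int = 5,
                  z_threshold: float = 3.0) -> List[int]:
    """Return indices of readings that sit more than `z_threshold` standard
    deviations above the mean of the preceding `window` readings."""
    recent = deque(maxlen=window)  # trailing baseline, oldest values drop off
    flagged = []
    for i, temp in enumerate(readings):
        if len(recent) == window:
            mu, sigma = mean(recent), pstdev(recent)
            # Only positive deviations matter here: we are looking for overheating.
            if sigma > 0 and (temp - mu) / sigma > z_threshold:
                flagged.append(i)
        recent.append(temp)
    return flagged


# Hypothetical conductor temperatures (°C) from one thermal sensor.
print(flag_hotspots([40.0, 40.5, 39.8, 40.2, 40.1, 40.3, 55.0, 40.2]))  # [6]
```

A flagged reading still enters the baseline window afterward. As a result, a sustained hotspot can inflate the baseline and mask later readings. A common refinement is to leave flagged points out of the baseline.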
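The MILP formulation in Carbon-Neutral Energy Synchronization can be sketched at toy scale. The sketch assumes a hypothetical four-source fleet in which each source is either off or runs between a minimum and a maximum output. The code enumerates the binary on/off decisions, which form the integer part of the problem. For each feasible commitment, it fills demand cleanest-first, which is optimal for the remaining linear part once commitments are fixed.

This approach finds the exact minimum-emission dispatch for a single period of this tiny instance. It ignores storage, forecasts, and multi-period constraints, and enumeration grows as 2^n. A real deployment would pass the same formulation to a dedicated MILP solver. Source names, capacities, and emission factors are made up for illustration.

```python
from itertools import product
from typing import Dict, List, Optional, Tuple

# Hypothetical fleet: (name, min MW when on, max MW, tCO2 per MWh)
Source = Tuple[str, float, float, float]
SOURCES: List[Source] = [
    ("solar", 0.0, 40.0, 0.00),
    ("wind", 0.0, 30.0, 0.00),
    ("gas_peaker", 20.0, 60.0, 0.45),
    ("coal", 50.0, 100.0, 0.95),
]


def dispatch(demand_mw: float,
             sources: List[Source] = SOURCES) -> Optional[Tuple[float, Dict[str, float]]]:
    """Minimum-emission dispatch for one period, solved exactly by enumerating
    every on/off commitment (the integer variables of the MILP)."""
    best = None
    for on in product((0, 1), repeat=len(sources)):
        committed = [s for s, u in zip(sources, on) if u]
        low = sum(s[1] for s in committed)
        high = sum(s[2] for s in committed)
        if not low <= demand_mw <= high:
            continue  # this commitment cannot serve demand exactly
        # With commitment fixed, the rest is a linear program with box
        # constraints and one balance equation: start every unit at its
        # minimum, then fill remaining demand from the cleanest unit upward.
        output = {name: mn for name, mn, _, _ in committed}
        remaining = demand_mw - low
        for name, mn, mx, _ in sorted(committed, key=lambda s: s[3]):
            extra = min(mx - mn, remaining)
            output[name] += extra
            remaining -= extra
        emissions = sum(output[name] * rate for name, _, _, rate in committed)
        if best is None or emissions < best[0]:
            best = (emissions, output)
    return best  # None if no commitment can meet demand


print(dispatch(90.0))  # (9.0, {'solar': 40.0, 'wind': 30.0, 'gas_peaker': 20.0})
```

For a 90 MW demand, the sketch holds the gas peaker at its 20 MW minimum and covers the rest with solar and wind. The resulting emissions are 9 tCO2 for the hour.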
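The learning loop behind Virtual Energy Architects can be shown in miniature with tabular Q-learning, a much simpler relative of the deep reinforcement learning that item describes. In this hypothetical toy, the grid is reduced to two load states, and the agent chooses whether to charge or discharge storage. The reward rule is an assumption, not a real grid objective: it favors discharging at peak load and charging off-peak. The agent only observes rewards as it acts, which mirrors the interact-and-observe loop described above.

```python
import random
from typing import Dict, Tuple

STATES = ("low_load", "high_load")
ACTIONS = ("charge", "discharge")


def reward(state: str, action: str) -> float:
    """Hypothetical reward: discharging storage at peak and charging
    off-peak both count as stabilizing the grid."""
    good = (state, action) in {("high_load", "discharge"), ("low_load", "charge")}
    return 1.0 if good else -1.0


def train(steps: int = 5000, alpha: float = 0.1, gamma: float = 0.9,
          epsilon: float = 0.1, seed: int = 0) -> Dict[Tuple[str, str], float]:
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    state = rng.choice(STATES)
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)  # explore
        else:
            action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit
        r = reward(state, action)
        next_state = rng.choice(STATES)  # toy dynamics: load level is random
        target = r + gamma * max(q[(next_state, a)] for a in ACTIONS)
        q[(state, action)] += alpha * (target - q[(state, action)])
        state = next_state
    return q


def greedy_policy(q: Dict[Tuple[str, str], float]) -> Dict[str, str]:
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in STATES}


print(greedy_policy(train()))  # {'low_load': 'charge', 'high_load': 'discharge'}
```

A deep RL agent replaces the `q` table with a neural network so it can handle continuous, high-dimensional grid states. The update rule keeps the same shape.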
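The data-integrity property claimed in the Energy Democracy item rests in part on hash chaining, which can be sketched without any blockchain platform. In this minimal example, each meter reading stores the SHA-256 hash of the previous entry, so altering any past reading breaks verification. The record fields and helper names are hypothetical. The sketch also deliberately omits what makes a real blockchain distributed: consensus, replication, and smart contracts.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def block_hash(body: Dict) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes identically.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_reading(chain: List[Dict], meter_id: str, kwh: float, timestamp: str) -> None:
    prev = chain[-1]["hash"] if chain else GENESIS
    body = {"meter_id": meter_id, "kwh": kwh, "timestamp": timestamp, "prev_hash": prev}
    chain.append({**body, "hash": block_hash(body)})


def verify(chain: List[Dict]) -> bool:
    """Recompute every hash and check each entry links to its predecessor."""
    prev = GENESIS
    for block in chain:
        body = {k: v for k, v in block.items() if k != "hash"}
        if block["prev_hash"] != prev or block_hash(body) != block["hash"]:
            return False
        prev = block["hash"]
    return True


ledger: List[Dict] = []
append_reading(ledger, "M1", 1.25, "2024-01-01T00:00Z")
append_reading(ledger, "M1", 1.40, "2024-01-01T01:00Z")
print(verify(ledger))    # True
ledger[0]["kwh"] = 0.10  # tamper with a past reading
print(verify(ledger))    # False
```

Recomputing the tampered entry’s own hash would not hide the change, because the next entry’s `prev_hash` would no longer match.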
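The word "tuned" in the Resonance-Based Grid Resilience item refers to matching the damper’s natural frequency to the structure it protects. Consider a passive tuned mass damper on an undamped structure under harmonic forcing, and let μ be the damper-to-structure mass ratio. For this case, Den Hartog’s classical rules give an optimal frequency ratio of 1/(1 + μ) and an optimal damping ratio of sqrt(3μ / (8(1 + μ)^3)). The sketch below computes both values. It covers only this passive textbook case, not the active, sensor-driven control the item describes, and the function name is illustrative.

```python
import math
from typing import Tuple


def den_hartog_tuning(mass_ratio: float) -> Tuple[float, float]:
    """Den Hartog optimum for a passive tuned mass damper attached to an
    undamped primary structure under harmonic forcing.

    mass_ratio: damper mass divided by the primary structure's modal mass.
    Returns (frequency ratio f_damper / f_structure, damper damping ratio).
    """
    if mass_ratio <= 0:
        raise ValueError("mass_ratio must be positive")
    freq_ratio = 1.0 / (1.0 + mass_ratio)
    damping_ratio = math.sqrt(3.0 * mass_ratio / (8.0 * (1.0 + mass_ratio) ** 3))
    return freq_ratio, damping_ratio


f_ratio, zeta = den_hartog_tuning(0.05)
print(round(f_ratio, 4), round(zeta, 4))  # 0.9524 0.1273
```

For a 5% mass ratio, the damper should be tuned to about 95.2% of the structure’s natural frequency, with a damping ratio of roughly 0.127.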

Data Science Studios, modern hubs of discovery driven by advanced analytics and the magic of machine learning, promise to revolutionize industries, with the energy sector leading the charge. Yet behind the scenes of these high-tech sanctuaries lies an uncharted prelude, an unsung hero: the often underestimated art and science of data management.

Data Management: The Uncharted Prelude

Imagine a Data Science Studio as a bustling laboratory, filled with cutting-edge tools and a team of experts, each fueled by curiosity to unlock the hidden value within the labyrinth of data. It’s a place where data alchemy is practiced every day, with the aim of turning raw data into valuable insights. But within this laboratory, there’s a fundamental, often overlooked component: a foundation of well-organized, pristine, and easily accessible data. Without this crucial backdrop, the laboratory is like a library without a catalog, a puzzle with missing pieces.

Data management, though often in the shadows, plays the role of the invisible conductor in this symphony of data that data scientists are poised to create. It’s the prelude, setting the stage for innovation and efficiency. Just as a conductor guides a symphony from chaos to harmony, data management orchestrates the journey from raw data to insightful revelations, ensuring that the data is not just accessible but also reliable, accurate, and secure.

The Challenge: Taming the Data Beast

In the ever-evolving landscape of the energy industry, data flows in from diverse sources at an astonishing rate. The sheer volume, variety, and velocity of this data deluge are staggering, and the surge of unstructured and dark data adds to the complexity. Unstructured data, which spans sources such as social media posts, emails, and images, does not fit neatly into structured databases, making it a puzzle that defies traditional solutions. According to IDC, unstructured data constitutes over 80% of the world’s data and is growing at 55-65% annually. Gartner predicts that by 2025, 95% of new healthcare data will be unstructured. Dark data, on the other hand, is information that organizations collect but leave largely unused. Together, these trends create challenges on multiple fronts:

- Data Silos: In the contemporary energy sector, data silos pose a significant technical challenge. These silos emerge due to disparate data storage systems, each with its own unique formats and protocols. As a result, data becomes compartmentalized, hindering seamless data sharing and access. This issue limits the comprehensive analysis of data, making it difficult to gain a unified view of critical information.

- Data Quality: Technical problems with data quality are common, often resulting from inconsistent data entry, data duplication, and incomplete records. Such issues hinder the reliability of data-driven decision-making, leading to misguided conclusions and ineffective strategies, affecting the efficiency and effectiveness of operations.

- Data Security: Ensuring robust data security is a paramount concern in a world prone to cyber threats and data breaches. Challenges arise in implementing security measures, including encryption, access controls, intrusion detection systems, and audits. Failing to address data security adequately can result in unauthorized access to sensitive data, putting both customers and the organization at risk.

- Integration Challenges: Data integration challenges are pervasive. Merging data from sources with different formats and structures requires advanced technology, including ETL (Extract, Transform, Load) processes, data mapping, and integration platforms. Insufficient integration leaves data gaps that prevent a complete, accurate understanding of the data landscape and lead to fragmented decision-making.

- Scalability: With the exponential growth of data, organizations encounter technical hurdles related to data management. Scalability problems can lead to system performance issues and operational inefficiencies. To address this issue, organizations need scalable data storage and processing solutions, such as distributed databases and cloud-based platforms. Without these technical solutions, managing large data volumes becomes challenging and can impede operations.

- Compliance: Navigating the complex web of data regulations and industry standards is a significant technical challenge. Achieving compliance involves implementing precise technical measures, such as data governance frameworks, data retention policies, and privacy protection mechanisms. Without these measures, organizations risk non-compliance and the legal and reputational consequences that follow.
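Duplicated and incomplete records, two of the data quality problems listed above, are usually the first things to measure. As a minimal sketch, assume smart meter records with a hypothetical `meter_id`/`timestamp`/`kwh` schema. The function below counts records that are missing a required field, plus any extra copies of the same `(meter_id, timestamp)` key.

```python
from collections import Counter
from typing import Dict, List

REQUIRED_FIELDS = ("meter_id", "timestamp", "kwh")  # hypothetical schema


def profile(records: List[Dict]) -> Dict[str, int]:
    """Count incomplete records and extra copies of the same (meter_id, timestamp) key."""
    incomplete = sum(
        1 for r in records if any(r.get(f) in (None, "") for f in REQUIRED_FIELDS)
    )
    key_counts = Counter((r.get("meter_id"), r.get("timestamp")) for r in records)
    duplicates = sum(n - 1 for n in key_counts.values() if n > 1)
    return {"total": len(records), "incomplete": incomplete, "duplicate_keys": duplicates}


sample = [
    {"meter_id": "M1", "timestamp": "2024-01-01T00:00Z", "kwh": 1.2},
    {"meter_id": "M1", "timestamp": "2024-01-01T00:00Z", "kwh": 1.2},  # duplicate entry
    {"meter_id": "M2", "timestamp": "2024-01-01T00:00Z", "kwh": None},  # missing value
    {"meter_id": "M3", "timestamp": "2024-01-01T00:00Z", "kwh": 0.8},
]
print(profile(sample))  # {'total': 4, 'incomplete': 1, 'duplicate_keys': 1}
```

Tracking these counts over time shows whether data quality is improving and gives a baseline for the quality-assurance techniques that follow.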

The Combat Strategy: Forging a Unified Data Management Strategy

The concept of a “combat strategy” suggests a proactive and intentional approach to addressing the complexities and difficulties of data management. Crafting a unified data management strategy is comparable to outfitting an organization with a comprehensive battle plan. This entails aligning diverse data management practices, from data acquisition and storage to processing, analytics, and governance, into a coherent and harmonious framework. The objective is to dismantle data silos and ensure that data is accessible, secure, and actionable, ultimately empowering the organization to make well-informed decisions and gain a competitive advantage. Here are ten techniques that you can implement to establish a unified and effective data management practice:

- Cognitive Data Catalogs: These catalogs leverage natural language processing (NLP) and machine learning algorithms to analyze data assets, user interactions, and context. Using NLP, they understand the semantic content of data and user queries, perform sentiment analysis, and employ content clustering. Recommendations are generated through collaborative and content-based filtering, enriched by user behavior analysis, resulting in an intelligent data retrieval experience.

- Self-Healing Master Data Management (MDM): Next-gen MDM systems use AI for data quality assurance. They employ algorithms for data profiling, anomaly detection, and data matching. AI models such as decision trees and neural networks autonomously identify and resolve data conflicts and predict potential issues, minimizing manual intervention. Probabilistic record linkage and deduplication techniques keep the resulting records accurate.

- Data Visualization with Metadata and Context Analytics: Advanced data visualization tools integrate metadata and context analytics, leveraging AI-driven algorithms to create interactive and dynamic data representations. AI automatically extracts and categorizes metadata, enabling users to explore data in its context. Machine learning algorithms identify relationships, patterns, and anomalies, providing deeper insights for informed decision-making.

- Augmented Data Quality Assurance: Modern data quality tools incorporate AI-driven anomaly detection using techniques such as clustering, regression analysis, and autoencoders. Predictive analytics models like time series forecasting detect emerging data quality issues, relying on historical data and algorithms like gradient boosting and deep learning to anticipate and prevent issues based on patterns and trends.

- AI-Driven Data Lifecycle Management (DLM): AI-driven DLM solutions employ predictive analytics and machine learning models to autonomously determine data retention, archiving, and disposal strategies. They analyze data access patterns, data sensitivity, and legal compliance requirements. Technologies like reinforcement learning and decision trees assist in making data lifecycle decisions based on cost-benefit analyses and predefined policies.

- Intelligent Data Governance: Advanced data governance systems use machine learning algorithms to automate policy enforcement. Predictive models, classification algorithms, and anomaly detection ensure compliance with regulatory and organizational rules. These systems combine rule-based and role-based access control with machine learning to adapt and enforce governance policies continuously.

- Data Cleaning and Enrichment: AI-driven data cleaning tools use techniques like data imputation, outlier detection, and data transformation. They enrich data with information from external sources using techniques like entity recognition and knowledge graph construction. Models such as random forests and deep neural networks correct and enhance data quality.

- Enhanced Data Security: Cutting-edge data security employs machine learning for real-time threat detection. Techniques such as unsupervised learning, clustering, and behavioral analysis identify anomalies in data access, user behavior, and network traffic. Adaptive authentication and dynamic access control adjust security policies in response to detected threats and evolving attack vectors, supported by AI-powered predictive threat modeling and real-time response integration.

- Data Accessibility and Sharing: Modern data management solutions prioritize data accessibility and secure sharing. They use AI for access control and data sharing policies to ensure authorized users can easily and securely access data. Machine learning algorithms play a role in dynamic access control, adapting permissions based on user behavior and context, facilitating collaboration while maintaining security and regulatory compliance.

- Data Privacy and Compliance Management: Advanced data management solutions incorporate AI and machine learning to automate data privacy and compliance management. They analyze data to identify sensitive information and enforce data protection regulations, such as GDPR or HIPAA. AI models, including natural language processing and pattern recognition, ensure that data is handled in accordance with legal requirements. These systems can anonymize, pseudonymize, or redact sensitive data automatically, assisting organizations in adhering to stringent data privacy and compliance standards.
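The search side of Cognitive Data Catalogs can be approximated with classic TF-IDF ranking. The sketch below indexes hypothetical dataset descriptions and ranks them against a free-text query by cosine similarity. It is a keyword-based stand-in for the semantic NLP, collaborative filtering, and behavior analysis the item describes, all of which would require trained models. The dataset names and descriptions are invented for illustration.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

Vector = Dict[str, float]


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(catalog: Dict[str, str]) -> Tuple[Dict[str, Vector], Dict[str, float]]:
    """Turn {dataset_name: description} into TF-IDF vectors plus the IDF table."""
    term_counts = {name: Counter(tokenize(desc)) for name, desc in catalog.items()}
    n_docs = len(term_counts)
    doc_freq = Counter(term for counts in term_counts.values() for term in counts)
    # Smoothed IDF: rarer terms get higher weight, and no term gets zero weight.
    idf = {t: math.log((1 + n_docs) / (1 + df)) + 1.0 for t, df in doc_freq.items()}
    vectors = {name: {t: c * idf[t] for t, c in counts.items()}
               for name, counts in term_counts.items()}
    return vectors, idf


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query: str, vectors: Dict[str, Vector], idf: Dict[str, float],
           top_k: int = 3) -> List[str]:
    # Terms never seen in the catalog are ignored.
    q = {t: c * idf[t] for t, c in Counter(tokenize(query)).items() if t in idf}
    return sorted(vectors, key=lambda name: cosine(q, vectors[name]), reverse=True)[:top_k]


catalog = {
    "substation_thermal": "Infrared thermal images of substations and power lines",
    "smart_meter_kwh": "Hourly smart meter energy consumption in kWh",
    "wind_forecast": "Wind speed weather forecast for turbine sites",
}
vectors, idf = build_index(catalog)
print(search("thermal images of power lines", vectors, idf)[0])  # substation_thermal
```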
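The record linkage and deduplication step in Self-Healing Master Data Management (MDM) can be illustrated with string-similarity matching from Python’s standard library. This sketch groups customer records whose names are at least 85% similar after normalization, as measured by `difflib.SequenceMatcher.ratio`, and whose postcodes match exactly. The fields, the threshold, and the greedy clustering are assumptions for illustration. The approach is simpler than the probabilistic linkage and AI models the item mentions.

```python
from difflib import SequenceMatcher
from typing import Dict, List


def normalize(name: str) -> str:
    """Lowercase, drop punctuation that often varies, and collapse whitespace."""
    return " ".join(name.lower().replace(".", " ").replace(",", " ").split())


def similarity(a: str, b: str) -> float:
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def link_records(records: List[Dict], threshold: float = 0.85) -> List[List[Dict]]:
    """Greedy single-pass clustering: a record joins the first cluster whose
    first record has the same postcode and a name similarity >= threshold."""
    clusters: List[List[Dict]] = []
    for rec in records:
        for cluster in clusters:
            head = cluster[0]
            if (rec["postcode"] == head["postcode"]
                    and similarity(rec["name"], head["name"]) >= threshold):
                cluster.append(rec)
                break
        else:  # no matching cluster found
            clusters.append([rec])
    return clusters


customers = [
    {"id": 1, "name": "Northwind Energy Inc.", "postcode": "77002"},
    {"id": 2, "name": "NorthWind Energy, Inc", "postcode": "77002"},
    {"id": 3, "name": "Southfield Power LLC", "postcode": "77002"},
    {"id": 4, "name": "Northwind Energy Inc.", "postcode": "10001"},
]
print([[r["id"] for r in c] for c in link_records(customers)])  # [[1, 2], [3], [4]]
```

In a full MDM system, each cluster would then be merged into a single "golden record" using survivorship rules.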
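The decisions in AI-Driven Data Lifecycle Management (DLM) ultimately map each data asset to an action such as keep, archive, or dispose. The hand-written rules below stand in for the learned models the item describes. They assume hypothetical thresholds: archive after 180 idle days and dispose after 2,555 days, roughly seven years. These are placeholders, not legal or regulatory guidance. In this sketch, a legal hold always overrides disposal.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class DataAsset:
    name: str
    last_accessed: date
    sensitive: bool
    legal_hold: bool = False


def lifecycle_action(asset: DataAsset, today: date,
                     archive_after_days: int = 180,
                     dispose_after_days: int = 2555) -> str:
    """Map an asset to a lifecycle action. Thresholds are placeholders."""
    idle_days = (today - asset.last_accessed).days
    if asset.legal_hold:
        return "retain"  # a legal hold overrides every other rule
    if idle_days >= dispose_after_days:
        return "dispose"
    if idle_days >= archive_after_days:
        # Sensitive data goes to an encrypted archive tier.
        return "archive_encrypted" if asset.sensitive else "archive"
    return "keep_hot"


today = date(2024, 6, 1)
print(lifecycle_action(DataAsset("billing_2022", date(2023, 1, 1), sensitive=True), today))
# archive_encrypted
```

A learned approach would replace the fixed thresholds with values fitted to access logs and storage costs. Hard constraints such as legal holds would still need to be enforced as explicit rules.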
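Two of the techniques named under Data Cleaning and Enrichment, imputation and outlier detection, have simple statistical baselines. This sketch fills missing readings with the median of the observed values. It then flags points outside Tukey’s fences, which lie 1.5 times the interquartile range beyond the quartiles. The function name and the `k = 1.5` default are illustrative choices, not part of any particular tool.

```python
from statistics import median, quantiles
from typing import List, Optional, Tuple


def clean_series(values: List[Optional[float]], k: float = 1.5) -> Tuple[List[float], List[int]]:
    """Median-impute missing values (None) and flag outliers outside Tukey's fences.

    Returns (imputed_values, outlier_indices). Needs at least two observed values.
    """
    observed = [v for v in values if v is not None]
    fill = median(observed)  # the median is robust to the outliers we are hunting
    imputed = [fill if v is None else v for v in values]
    q1, _, q3 = quantiles(observed, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    outliers = [i for i, v in enumerate(imputed) if v < low or v > high]
    return imputed, outliers


readings = [10.0, 11.0, None, 12.0, 10.5, 95.0, 11.5]
print(clean_series(readings))
# ([10.0, 11.0, 11.25, 12.0, 10.5, 95.0, 11.5], [5])
```

The gap is filled with 11.25, and the 95.0 reading at index 5 is flagged for review rather than silently dropped.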
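The pseudonymization and redaction mentioned in Data Privacy and Compliance Management can be sketched with the Python standard library. A keyed HMAC-SHA256 replaces a customer identifier with a stable token, so datasets can still be joined, and a regular expression redacts email addresses from free text. The record fields, key, and 16-character token length are hypothetical. The regex catches common email formats only, and the NLP-based detection the item describes would cover many more kinds of sensitive data. Under GDPR, pseudonymized data still counts as personal data, so the key must be protected and stored separately.

```python
import hashlib
import hmac
import re
from typing import Dict

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed, deterministic token: the same value and key always give the same
    token, so pseudonymized tables can still be joined."""
    return hmac.new(key, value.encode(), hashlib.sha256).hexdigest()[:16]


def redact_emails(text: str) -> str:
    return EMAIL_RE.sub("[REDACTED_EMAIL]", text)


def protect(record: Dict, key: bytes) -> Dict:
    """Return a copy of a hypothetical customer record with PII handled."""
    out = dict(record)
    out["customer_id"] = pseudonymize(record["customer_id"], key)
    out["notes"] = redact_emails(record["notes"])
    return out


key = b"demo-key-keep-in-a-secrets-manager"  # placeholder; never hard-code real keys
rec = {"customer_id": "C-1001", "notes": "Contact jane.doe@example.com re: outage", "kwh": 12.5}
print(protect(rec, key)["notes"])  # Contact [REDACTED_EMAIL] re: outage
```

A keyed HMAC is preferable to a plain hash here. Customer IDs follow predictable formats, so an unkeyed hash could be reversed by hashing every plausible ID.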

In the ever-evolving landscape of data science and analytics, these advanced strategies for preparing your data for a Data Science Studio set you on a path of innovation and efficiency. They not only ensure that your data is ready for analysis but also position your organization as a thought leader in a data-driven world. By embracing these techniques, you’re equipped to harness the full potential of data in the studio, unleashing its transformative power on the energy sector and beyond.

The Data Dynamics Advantage

Data Dynamics’ Unified Unstructured Data Management Platform is a game-changer for energy companies seeking to optimize their data for Data Science Studios. It streamlines the entire data preparation process, offering automated data discovery and inventorying of data sources, ensuring data quality through advanced cleaning and quality assurance tools, and enabling seamless data integration and virtualization.

The platform places a strong emphasis on data security, compliance, and resilience, which are critical in the energy sector. It also tracks data lineage and offers version control for enhanced data management, while AI-driven data cleansing and semantic integration make data more meaningful and accessible. This comprehensive solution empowers energy companies to efficiently prepare their data for advanced analytics, positioning them as thought leaders in the industry’s data-driven evolution. Trusted by more than 28 Fortune 100 organizations, the platform encompasses four integral modules (Data Analytics, Mobility, Security, and Compliance) within a single software architecture. By centralizing data from various touchpoints and systems, it simplifies capturing, storing, and overseeing the data ecosystem through a single-pane view.

In essence, the Data Dynamics Unified Data Management Platform is a potent tool for businesses seeking to optimize their data for a Data Science Studio. To learn more about Data Dynamics, visit www.datadynamicsinc.com or connect with us at solutions@datdyn.com / (713)-491-4298.